If you are managing a multi-account environment in a cloud provider, you know how important it is to have backups of your resources. Managing backups at scale can be hard, but AWS has your back. With AWS Backup you can manage your backups across all your accounts in a decentralized or centralized manner. AWS Backup is a managed service that is easy to set up and includes multiple options. If you are working in a regulated environment and need to provide evidence that your environment is fully covered by backups, AWS Backup lets you check your compliance. Do you also have a requirement to make sure your backups can be restored? AWS Backup also offers features to restore your backed-up resources automatically.

In this series of articles I will do a deep dive into the service and show how to set up your backup architecture with AWS Backup at scale in a multi-account environment. Let's get started!

Basic concepts

- Backup plans: In the AWS Backup world, backup plans are sets of rules and policies, which you can deploy with infrastructure as code, that define when, what and how your backups run. Later in these articles I will explain in detail which options are available. You can create multiple backup plans, for instance one plan for daily backups and another one for weekly backups. When you create a backup plan, you can assign the resources that you want to back up. The strategy you use to create your plans will depend on the resources you want to back up, your backup compliance requirements, and the layers of backups you want to add.

- Incremental backups: This is an important concept from a cost optimization perspective. The first time a backup plan is triggered, it creates a full copy of your data. After that first backup, consecutive backups are incremental, so only the data that changed since the previous backup point is backed up. Backups are retained for as long as the retention period you configure. It's recommended to set a retention period of at least one week. If you set a shorter retention period, you risk that the previous recovery point has already expired when the next backup runs, so every backup becomes a full copy of the data, creating unnecessary costs.

- Recovery points: Every backup performed by AWS Backup is called a recovery point.

- Backup vault: As its name indicates, a vault is where all our backups are stored. Vaults are immutable by nature, so backups cannot be modified once they are stored. However, while recovery points cannot be modified, they can be deleted by the account owners. If you want an additional lock on the recovery points, you can add a vault lock in compliance or governance mode; I will explain later in these articles how to configure it. The lock offers an extra layer of protection for your recovery points. Backups are encrypted at rest using KMS keys. The encryption key you set for the vault is the one used to encrypt your recovery points, which can be different from the key that encrypts the original data.

- Backup jobs: A backup job is the single unit of operation that the backup plan triggers. It is the actual operation that creates the backup of the resources configured in the backup plan and stores it in the designated vault, creating a new recovery point.
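To make the retention recommendation for incremental backups concrete, here is a minimal Python sketch of that reasoning. It is a simplified model, not AWS Backup's actual implementation: we assume a backup can only be incremental when an earlier recovery point is still retained at the moment the next backup runs. The function name `classify_backups` is hypothetical.

```python
def classify_backups(interval_days: int, retention_days: int, count: int) -> list[str]:
    """Label each of `count` scheduled backups as 'full' or 'incremental'.

    Simplified model (an assumption): a backup is incremental only if a
    previous recovery point has not yet expired when it runs.
    """
    kinds: list[str] = []
    retained: list[int] = []  # creation day of each recovery point still kept
    for i in range(count):
        today = i * interval_days
        # Drop recovery points whose retention period has ended by today.
        retained = [day for day in retained if today - day < retention_days]
        kinds.append("incremental" if retained else "full")
        retained.append(today)
    return kinds
```

With a weekly schedule and a 3-day retention period, `classify_backups(7, 3, 3)` yields three full backups, while a daily schedule with 30 days of retention produces one full backup followed only by incremental ones.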

Supported services

One of the advantages of AWS Backup is that you do not need to configure backups on each individual service; you can do it in a centralized manner from the AWS Backup service. Not every AWS resource is supported by AWS Backup, and depending on the service and the Region, not every AWS Backup feature is available. In these articles I will focus on the most common services and on the features available for all of them, which are enough to build Backup as a Service for your platform.

The services we cover are: S3, DynamoDB, EC2, EFS, EBS, RDS and Aurora.

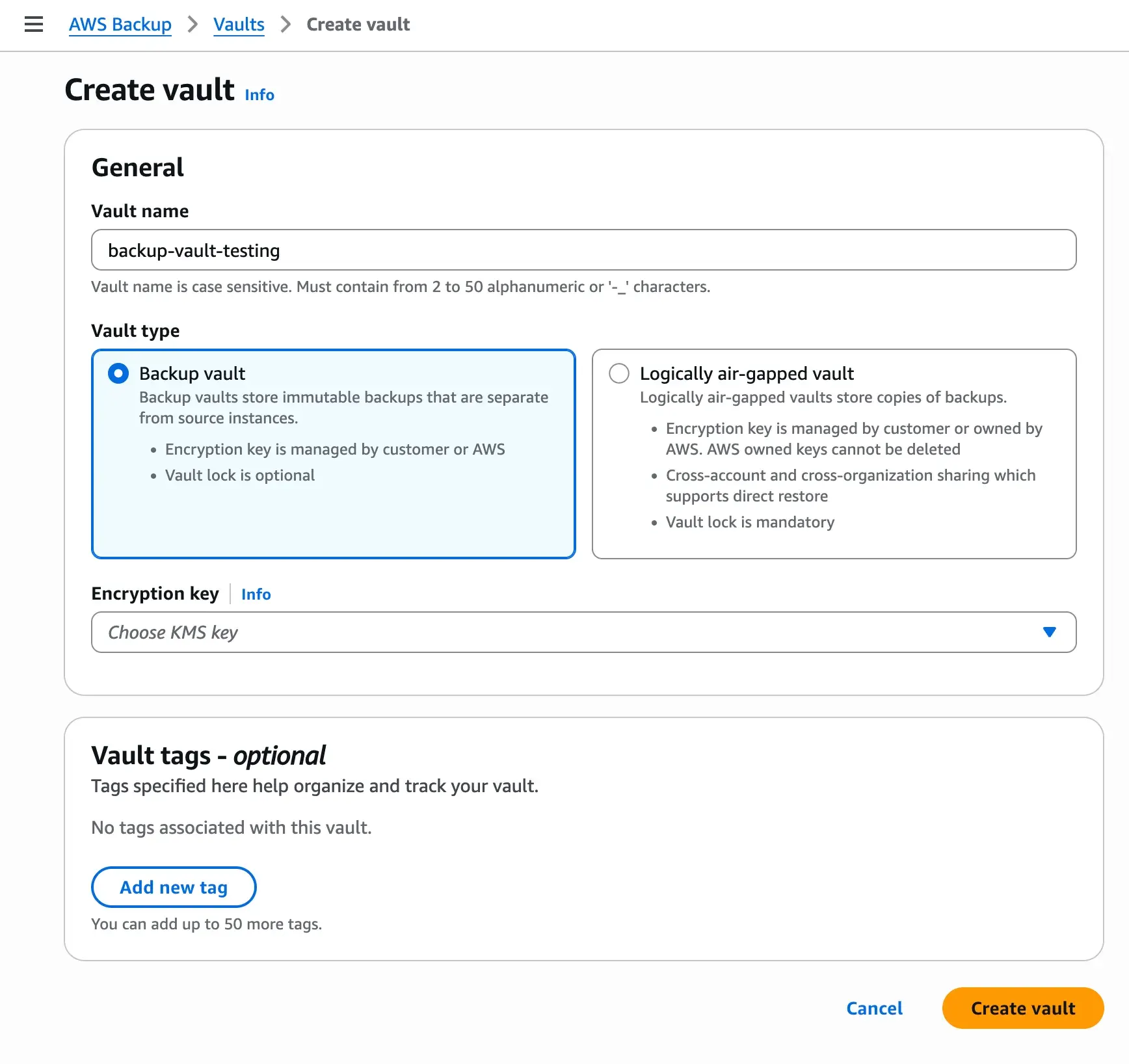

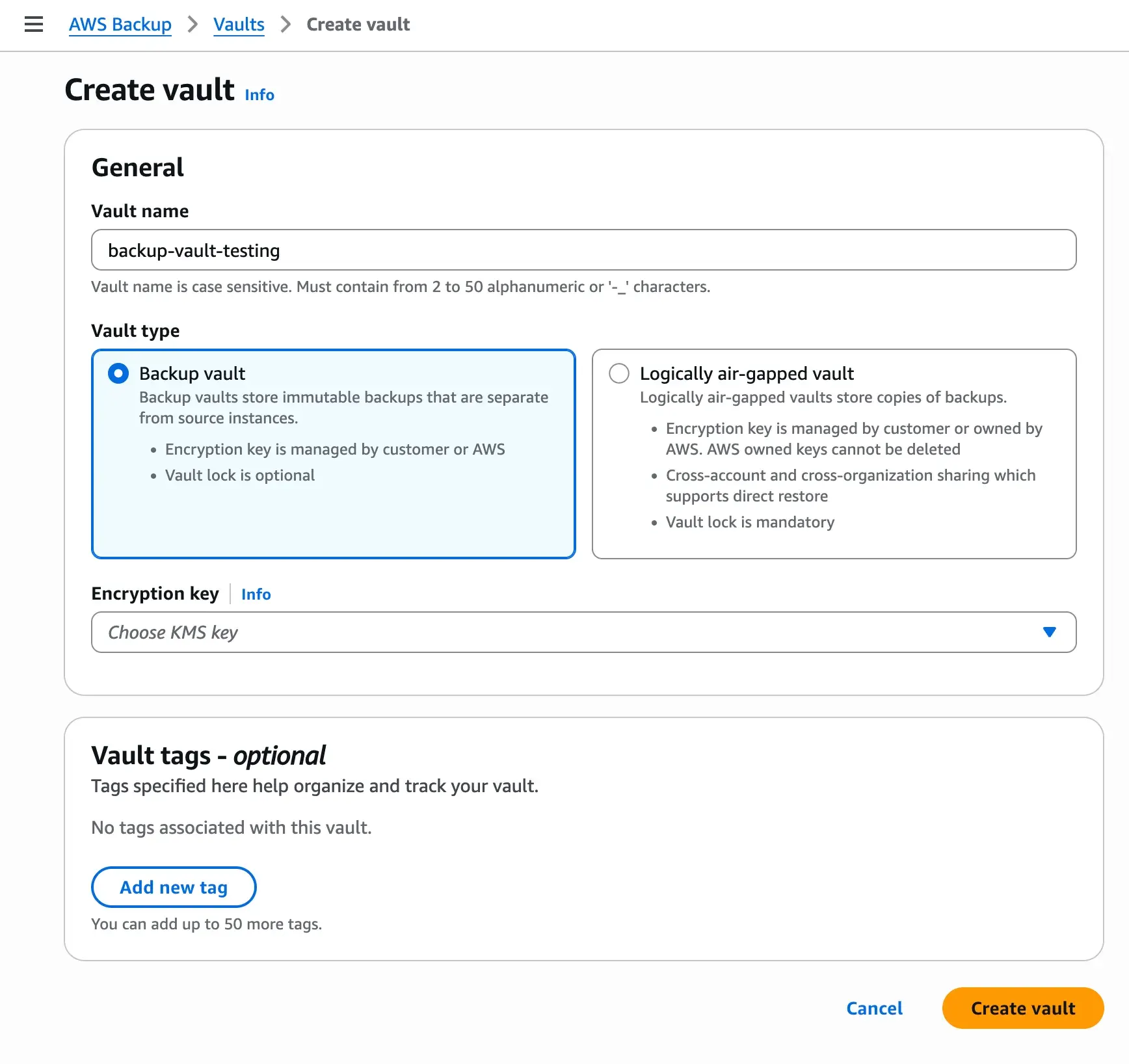

Creating a backup vault

Our first stop on this deep dive is creating our backup vault, which will store all the recovery points created by our backup plans. First, I will show you how to do the full setup in the console, so I can explain in detail the important concepts you need to understand when we translate the console operations into infrastructure as code.

Creating the vault is fairly simple. In this example, for the sake of simplicity, we won't set a custom KMS key. When you create a vault without a custom KMS key, AWS Backup uses an AWS managed key for us. For production environments, using a customer managed KMS key that we provision ourselves is highly recommended. In our scenario, we just need to give the vault a name.

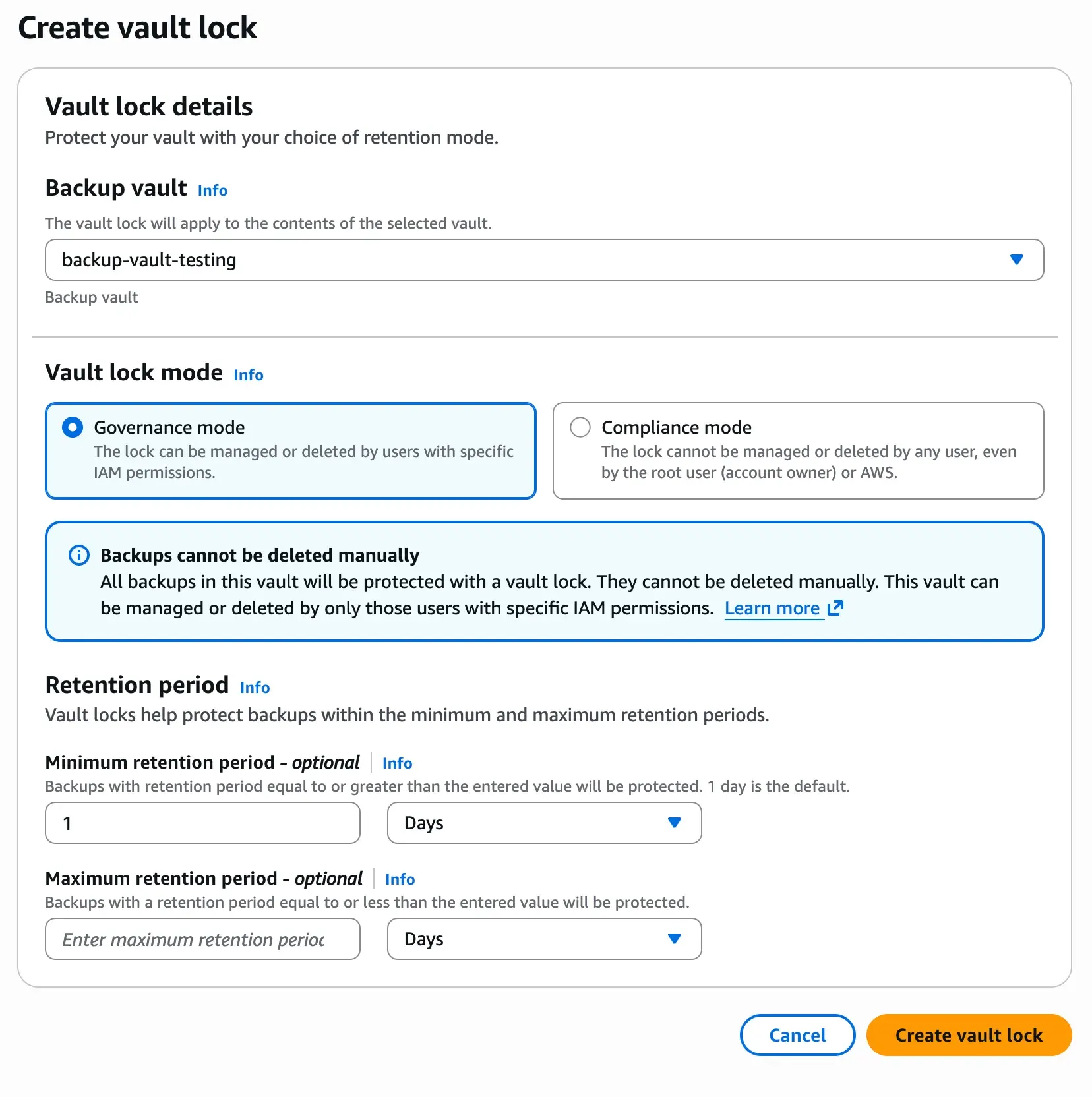

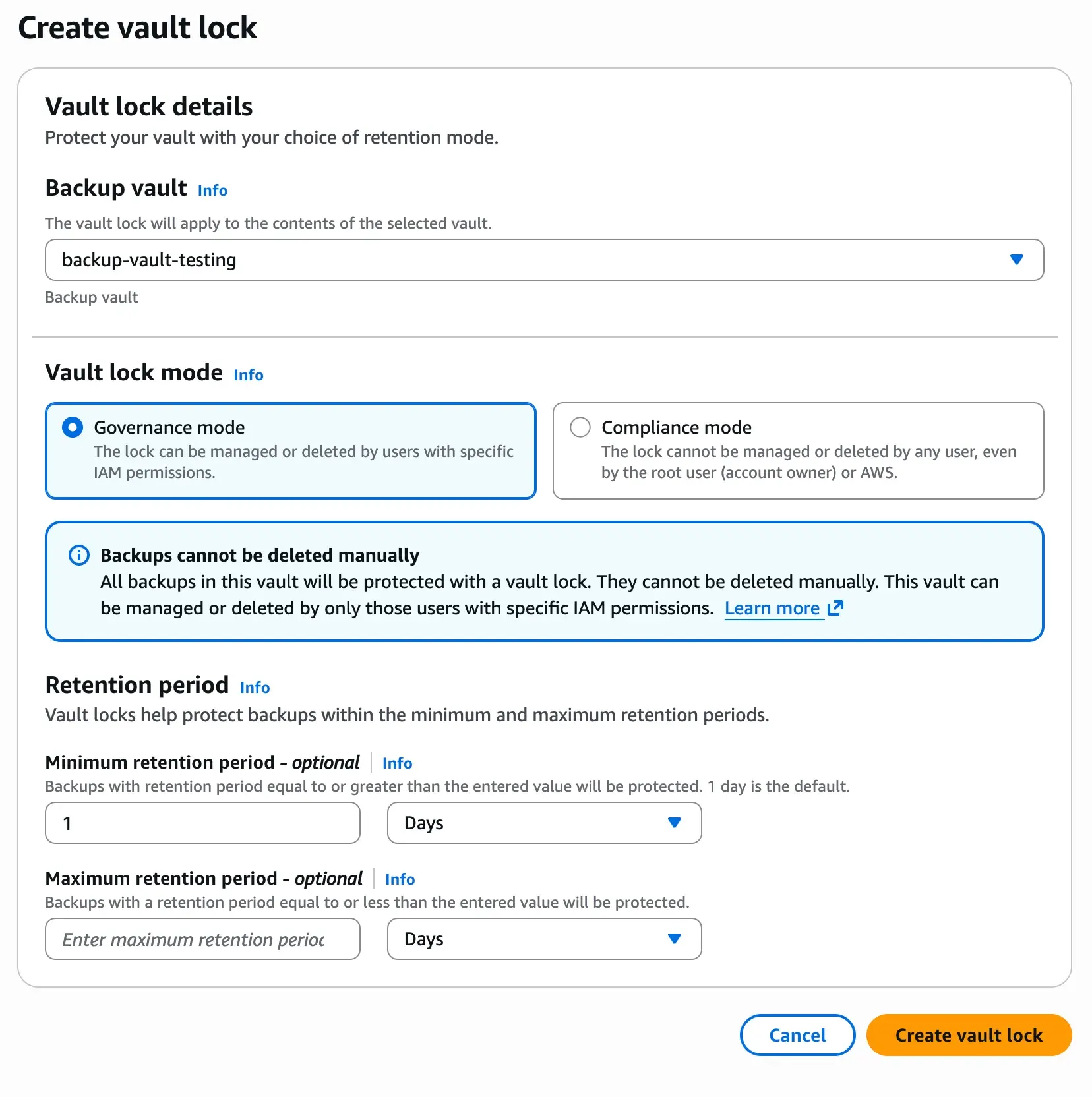
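When we move this step to infrastructure as code, the vault boils down to a CreateBackupVault request. The sketch below builds that request as a plain dictionary, assuming we only set the vault name and, optionally, the ARN of our own customer managed KMS key; the function name is hypothetical.

```python
from typing import Optional


def build_vault_request(name: str, kms_key_arn: Optional[str] = None) -> dict:
    """Build keyword arguments for the AWS Backup CreateBackupVault API."""
    request = {"BackupVaultName": name}
    # Only set EncryptionKeyArn when we bring our own customer managed KMS key;
    # otherwise AWS Backup uses its AWS managed key.
    if kms_key_arn is not None:
        request["EncryptionKeyArn"] = kms_key_arn
    return request
```

In a real deployment you could pass the result to the boto3 Backup client, for example `boto3.client("backup").create_backup_vault(**build_vault_request("platform-backup-vault"))`, where `platform-backup-vault` is only an example name.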

Once the vault is created, we can start backing up our data. One piece of configuration we have held back so far is the lock options, because they require a bit more explanation. Let's look at both options:

Lock options: Governance mode vs Compliance mode

As mentioned above, the backups taken by the service are immutable in the sense that the data in the recovery points cannot be altered. However, with the right IAM permissions we can still delete the recovery points. AWS offers another layer of protection for AWS Backup with two lock modes that prevent the management or deletion of the recovery points.

- Governance mode: This lock applies during the retention period we indicate and prevents users from deleting recovery points or changing the lock unless they have specific IAM permissions.

- Compliance mode: Compliance mode is the most secure mode. During the retention period, the vault is locked for any user, including the root user and even AWS. This mode offers the strongest protection, but it has hard implications: no one can manage the vault. In case we make a configuration mistake, AWS gives us a grace period (at least three days) during which we can still remove the lock. See the following screenshot:

Once the vault lock is created, our vault enforces the retention periods we configured. It's important to mention that once the lock is enforced, any backup job or recovery point with a retention period outside the range we indicate here will fail.
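Both lock modes are configured through the PutBackupVaultLockConfiguration API. As far as we know, leaving out `ChangeableForDays` creates a lock in governance mode, while setting it (minimum 3 days) starts the grace period after which the lock becomes an immutable compliance-mode lock. This sketch builds that request and checks whether a given retention period would be accepted by the locked vault; the helper names and values are hypothetical.

```python
from typing import Optional


def build_vault_lock_request(
    vault_name: str,
    min_retention_days: int,
    max_retention_days: int,
    compliance_grace_days: Optional[int] = None,
) -> dict:
    """Build keyword arguments for PutBackupVaultLockConfiguration.

    compliance_grace_days=None -> governance mode (no ChangeableForDays).
    compliance_grace_days=N    -> compliance mode after an N-day grace period.
    """
    if not 1 <= min_retention_days <= max_retention_days:
        raise ValueError("expected 1 <= min_retention_days <= max_retention_days")
    request = {
        "BackupVaultName": vault_name,
        "MinRetentionDays": min_retention_days,
        "MaxRetentionDays": max_retention_days,
    }
    if compliance_grace_days is not None:
        # During the grace period the lock can still be removed; afterwards it cannot.
        if compliance_grace_days < 3:
            raise ValueError("the compliance grace period must be at least 3 days")
        request["ChangeableForDays"] = compliance_grace_days
    return request


def retention_accepted(lock_request: dict, retention_days: int) -> bool:
    """A locked vault rejects recovery points whose retention is outside [min, max]."""
    return lock_request["MinRetentionDays"] <= retention_days <= lock_request["MaxRetentionDays"]
```

For example, a lock with a 7 to 35 day range accepts our 30-day daily plan but would make a job with 90 days of retention fail.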

Creating backup plans

Once we have the vault, it's time to create our first backup plan. The first step is to give the plan a name. Let's imagine a use case where we want a daily backup with a 30-day retention period. Because we can have more than one plan, I will call this one backup_daily_30_days_retention.

Once we indicate the backup plan name, we can go to set up the rule configuration:

We need to select the vault where the plan should store the recovery points, and the backup frequency. In upcoming articles I will explain the logically air-gapped vault. Backup plans are regional, which means the vault must be created in the same Region as the recovery points the plan creates. I will explain later how you can synchronize your vaults across Regions.

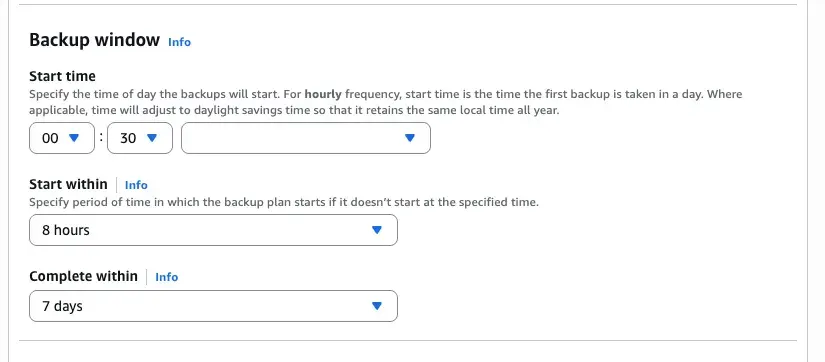
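The same plan can be described as a CreateBackupPlan request. This sketch builds the payload for our daily plan with 30 days of retention; the rule name `daily`, the vault name `platform-backup-vault` and the 05:00 UTC schedule are assumptions made for the example.

```python
def build_daily_plan(plan_name: str, vault_name: str, retention_days: int) -> dict:
    """Build keyword arguments for the AWS Backup CreateBackupPlan API."""
    rule = {
        "RuleName": "daily",  # hypothetical rule name
        "TargetBackupVaultName": vault_name,
        # AWS cron fields: minutes hours day-of-month month day-of-week year.
        # This runs every day at 05:00 UTC.
        "ScheduleExpression": "cron(0 5 ? * * *)",
        # Recovery points are deleted once the retention period has passed.
        "Lifecycle": {"DeleteAfterDays": retention_days},
    }
    return {"BackupPlan": {"BackupPlanName": plan_name, "Rules": [rule]}}


plan = build_daily_plan("backup_daily_30_days_retention", "platform-backup-vault", 30)
```

The resulting dictionary could then be passed to `boto3.client("backup").create_backup_plan(**plan)`.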

Now we can define when the backup should start. AWS cannot guarantee that the backup starts at the exact scheduled time, so we can also configure a time window within which the backup must start, and how long the backup has to complete. Depending on the amount of resources we expect to back up and the backup frequency, it's worth taking these window options into consideration.

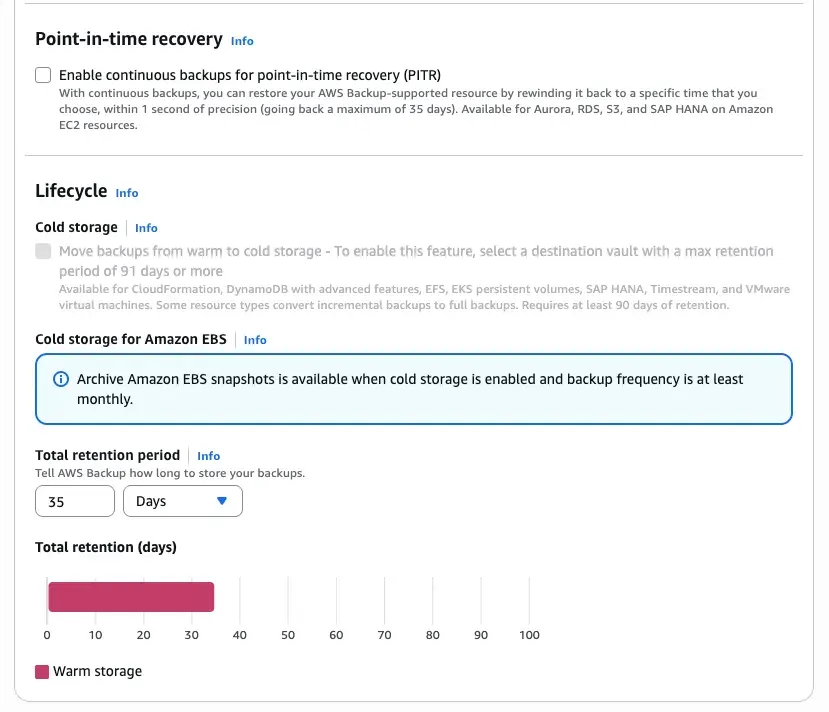
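In the API, these windows are expressed in minutes on each plan rule (`StartWindowMinutes` and `CompletionWindowMinutes`). The sketch below converts durations into those fields and computes the worst-case timeline of a scheduled run: the latest moment the job may start and, if it starts at that limit, the latest moment it may finish. The 60-minute minimum for the start window reflects our understanding of the API; the helper names are hypothetical.

```python
from datetime import datetime, timedelta


def window_settings(start_within: timedelta, complete_within: timedelta) -> dict:
    """Convert durations into the rule fields StartWindowMinutes / CompletionWindowMinutes."""
    start_minutes = int(start_within.total_seconds() // 60)
    complete_minutes = int(complete_within.total_seconds() // 60)
    if start_minutes < 60:
        raise ValueError("StartWindowMinutes should be at least 60 minutes")
    if complete_minutes <= 0:
        raise ValueError("CompletionWindowMinutes must be positive")
    return {"StartWindowMinutes": start_minutes, "CompletionWindowMinutes": complete_minutes}


def worst_case_timeline(scheduled: datetime, windows: dict) -> tuple[datetime, datetime]:
    """Latest allowed start, and latest finish if the job starts at that limit."""
    latest_start = scheduled + timedelta(minutes=windows["StartWindowMinutes"])
    # The completion window is counted from the moment the job starts.
    latest_finish = latest_start + timedelta(minutes=windows["CompletionWindowMinutes"])
    return latest_start, latest_finish
```

With a 1-hour start window and an 8-hour completion window, a job scheduled at 05:00 must start by 06:00 and, in the worst case, finish by 14:00, which is useful when you plan how many resources a single run can handle.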

Finally, there are two options that are only available for certain resources. On the one hand, we have point-in-time recovery. This option works like a time machine: you can go back to any moment within your recovery points with one-second precision. If you choose this option, the maximum retention period is 35 days, so keep in mind that it must be compatible with the retention periods of your vault. This feature is only supported for some services, and for the sake of the example we are not going to use it.

The second feature is cold storage, which helps with cost management: after the number of days you configure, recovery points are moved to cold storage. In the case of EBS backups, you can enrich the backup configuration with some additional options. Take into account that recovery points moved to cold storage must stay there for at least 90 days, so your vault's retention settings must allow for that, and for some resources every recovery point will contain a full backup. The total retention period of the recovery points must therefore be at least 90 days longer than the time before the transition, and no longer than the maximum retention period of the vault. Other interesting features are:

- Backup indexes: only available for S3 and EBS. Every recovery point is indexed so that it can be searched later.

- Scanning recovery points for malware with GuardDuty: only available for S3, EC2 and EBS.

- Advanced settings, such as backing up ACLs and object tags when you back up S3 resources.
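Before deploying a rule, it can help to check the retention constraints described above. The following sketch validates a rule dictionary in the shape used by CreateBackupPlan, assuming that continuous backups (point-in-time recovery) are limited to 35 days, that recovery points must stay in cold storage for at least 90 days, and that a locked vault caps the maximum retention. The function name and messages are our own; AWS Backup still performs its own validation when you create the plan.

```python
from typing import Optional


def check_rule(rule: dict, max_vault_retention: Optional[int] = None) -> list[str]:
    """Return human-readable problems with a backup rule (empty list means OK)."""
    problems = []
    lifecycle = rule.get("Lifecycle", {})
    delete_after = lifecycle.get("DeleteAfterDays")
    cold_after = lifecycle.get("MoveToColdStorageAfterDays")

    # Point-in-time recovery (continuous backup) keeps at most 35 days.
    if rule.get("EnableContinuousBackup") and (delete_after is None or delete_after > 35):
        problems.append("continuous backup retention must be 35 days or less")

    # Recovery points must spend at least 90 days in cold storage.
    if cold_after is not None and delete_after is not None and delete_after < cold_after + 90:
        problems.append("retention must be at least 90 days longer than MoveToColdStorageAfterDays")

    # A vault lock caps how long recovery points may be retained.
    if max_vault_retention is not None and (delete_after is None or delete_after > max_vault_retention):
        problems.append("retention exceeds the vault's maximum retention")
    return problems
```

For instance, moving recovery points to cold storage after 30 days and deleting them after 100 days is reported as a problem, while deleting them after 120 days passes.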

Assigning resources

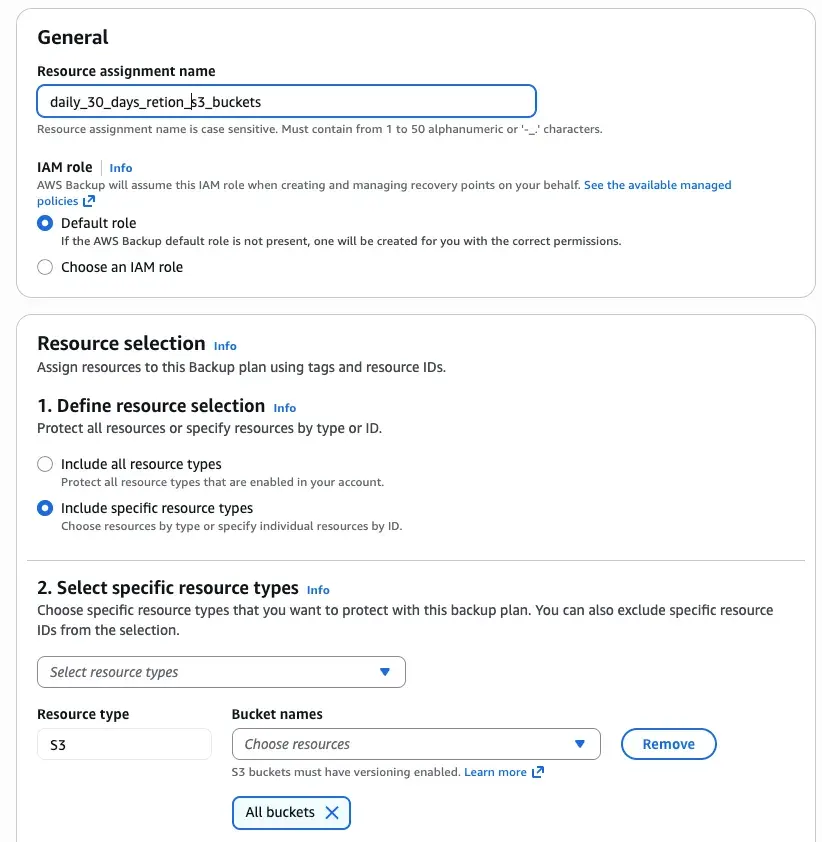

When we create the plan, we have the option to assign resources. For our daily backup, we are going to start by backing up S3 buckets. After creating the plan in the console, we will see the following screen:

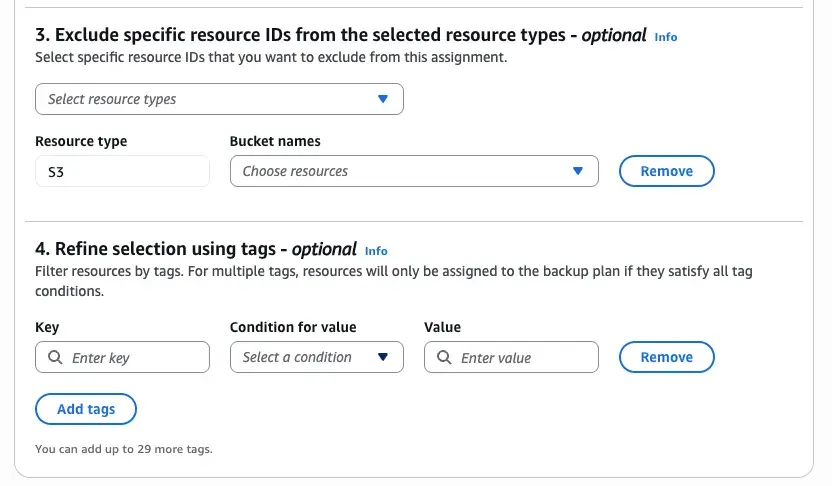

Each resource assignment is its own entity. After the name, we can select a role with the required permissions, or use one created by the service itself. Then we can start with the resource selection: we can select all supported resources in the account, or only specific ones. In this case we select all the buckets in the account. Now things get interesting. In many cases we do not want to back up every S3 bucket, because some are not worth backing up: for instance, temporary data, short-lived deployment assets, or other data used to maintain the account. So, in the next option we can select tags and conditions on their values, so that an S3 bucket is backed up only when the condition is met. In AWS, tags are really important, so when you build your applications, remember to include a tagging strategy. If you do not tag your resources from the start, think about how to auto-tag them later. The good thing about tagging is that it does not create infrastructure drift, so you can use AWS Config or a custom auto-tagging solution even on resources that were provisioned in CloudFormation stacks.
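The same assignment can be written as a CreateBackupSelection request. This sketch selects every S3 bucket with a wildcard ARN and keeps only the buckets that carry a given tag, using the `Conditions` block with an `aws:ResourceTag/<key>` condition key. The tag key `backup`, the value `daily`, the selection name, the plan ID and the role ARN (a placeholder account ID with the default service role name) are illustrative assumptions. A small local function mimics the tag condition so you can reason about which buckets would be picked up.

```python
def build_s3_selection(plan_id: str, role_arn: str, tag_key: str, tag_value: str) -> dict:
    """Build keyword arguments for the AWS Backup CreateBackupSelection API."""
    return {
        "BackupPlanId": plan_id,
        "BackupSelection": {
            "SelectionName": "s3-tagged-buckets",  # hypothetical name
            "IamRoleArn": role_arn,
            # Wildcard: start from every S3 bucket in the account...
            "Resources": ["arn:aws:s3:::*"],
            # ...and keep only the buckets whose tag value matches exactly.
            "Conditions": {
                "StringEquals": [
                    {"ConditionKey": f"aws:ResourceTag/{tag_key}", "ConditionValue": tag_value}
                ]
            },
        },
    }


def would_be_selected(bucket_tags: dict, tag_key: str, tag_value: str) -> bool:
    """Local approximation of the StringEquals tag condition above."""
    return bucket_tags.get(tag_key) == tag_value


ROLE_ARN = "arn:aws:iam::123456789012:role/service-role/AWSBackupDefaultServiceRole"
selection = build_s3_selection("example-plan-id", ROLE_ARN, "backup", "daily")
```

A bucket tagged `backup=daily` is included, while a temporary bucket without that tag is skipped.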

Creating one resource assignment per supported service gives the platform the opportunity to, for instance, back up only the services it currently supports, or to avoid adding resources your platform is never going to use.
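One way to implement this is to keep a list of the services the platform supports and generate one selection per service. The wildcard ARN patterns below are our assumption of how each resource type can be matched and should be checked against the AWS Backup documentation before use; the selection names, plan ID and role ARN are hypothetical.

```python
# Assumed wildcard ARN patterns per resource type (verify before use).
SERVICE_ARN_PATTERNS = {
    "s3": "arn:aws:s3:::*",
    "dynamodb": "arn:aws:dynamodb:*:*:table/*",
    "ec2": "arn:aws:ec2:*:*:instance/*",
    "ebs": "arn:aws:ec2:*:*:volume/*",
    "efs": "arn:aws:elasticfilesystem:*:*:file-system/*",
    "rds": "arn:aws:rds:*:*:db:*",
    "aurora": "arn:aws:rds:*:*:cluster:*",
}


def selections_per_service(plan_id: str, role_arn: str, services: list[str]) -> list[dict]:
    """Build one CreateBackupSelection request per service the platform enables."""
    requests = []
    for service in services:
        if service not in SERVICE_ARN_PATTERNS:
            # Refuse services the platform does not support yet.
            raise ValueError(f"unsupported service: {service}")
        requests.append({
            "BackupPlanId": plan_id,
            "BackupSelection": {
                "SelectionName": f"{service}-selection",
                "IamRoleArn": role_arn,
                "Resources": [SERVICE_ARN_PATTERNS[service]],
            },
        })
    return requests
```

Keeping the mapping explicit makes it easy to roll out a new service to all accounts by adding one entry, and it rejects anything the platform does not back up yet.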

Backup jobs and backup reports

When our backup plans are executed, the service generates a job per backup plan configuration we defined. Following the example we created above, a backup job will be created for our S3 resources. We can see the executions under AWS Backup → Jobs, and after a job has finished we can check the status of all jobs under AWS Backup → Dashboard → Jobs Dashboard. If we want to automate the monitoring of backup jobs, for instance to be alerted when a backup job fails, we can rely on Amazon EventBridge.
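A minimal sketch of such an EventBridge rule, assuming we want alerts for backup jobs that end in the `FAILED`, `ABORTED` or `EXPIRED` states. It builds the event pattern and a PutRule request (the rule name is hypothetical) and includes a very small matcher, a simplification of EventBridge's real matching rules, to show which events the pattern would catch.

```python
import json

# Match AWS Backup job state changes that did not end successfully.
FAILED_BACKUP_JOB_PATTERN = {
    "source": ["aws.backup"],
    "detail-type": ["Backup Job State Change"],
    "detail": {"state": ["FAILED", "ABORTED", "EXPIRED"]},
}


def build_rule_request(rule_name: str) -> dict:
    """Build keyword arguments for the EventBridge PutRule API."""
    return {"Name": rule_name, "EventPattern": json.dumps(FAILED_BACKUP_JOB_PATTERN)}


def matches(pattern: dict, event: dict) -> bool:
    """Tiny subset of EventBridge matching: nested keys and lists of exact values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        value = event[key]
        if isinstance(expected, dict):
            if not isinstance(value, dict) or not matches(expected, value):
                return False
        elif value not in expected:
            return False
    return True
```

In practice you would pass the request to `boto3.client("events").put_rule(**build_rule_request("backup-job-failed"))` and attach a target, such as an SNS topic, that notifies the team.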